On Wednesday morning, the U.S. Senate Committee on Commerce, Science, and Transportation held a hearing on mass violence, extremism, and digital responsibility. The purpose of the hearing was to examine the proliferation of extremism online and to assess the effectiveness of social media companies’ efforts to remove violent content from their platforms. The senators heard from representatives of Facebook, Twitter, Google, and the Anti-Defamation League.

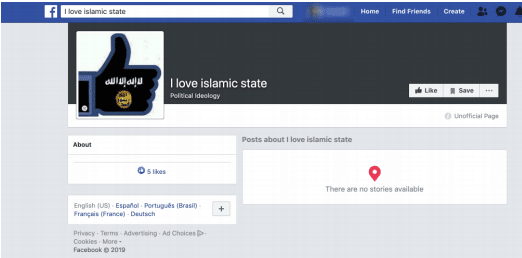

However, in January 2019 a whistleblower working with the National Whistleblower Center had already filed a petition with the Securities and Exchange Commission (SEC) that contradicts Facebook’s account of those efforts. The petition shows that Facebook not only hosts terror and hate content but has also auto-generated dozens of pages in the names of Middle East extremist and U.S. white supremacist groups, thereby facilitating networking and recruitment.

The Associated Press first reported on this story in May 2019. In the four months since, Facebook appears to have made little progress on auto-generation. Many of the pages cited in the report were not removed until more than six weeks later, on the day before Monika Bickert, Facebook’s head of global policy management, was questioned by a House of Representatives subcommittee.

Yesterday, the whistleblower filed a supplement to the SEC petition showing that Facebook is still auto-generating terror and hate content and that user pages promoting extremist groups remain easy to find with simple searches. When the Associated Press contacted Facebook about the latest auto-generated pages, the company claimed that “it cannot catch every one,” as if its own auto-generation feature were beyond its control.

Throughout the Senate hearing, Bickert repeated a statistic that 99% of the content Facebook removes is flagged by its technical tools rather than by user reports. However, this statistic exaggerates the company’s success in combating extremist messaging, because it describes only content that has already been removed.

The more relevant questions, which Facebook has not addressed, are what percentage of the terror and hate content created by others it fails to remove from its website, and how much new terror and hate content Facebook itself is creating. As George Selim of the Anti-Defamation League testified at the hearing, social media companies need to become “much more transparent about the prevalence and nature of hate on their platforms.”